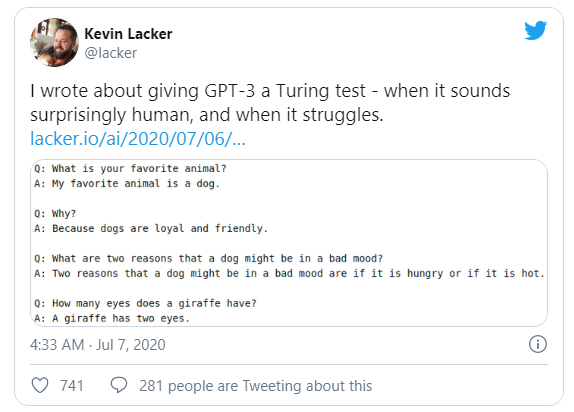

GPT-3 models are large language models developed by OpenAI that use deep learning to generate human-like text. Trained on vast amounts of text, they can produce a wide range of outputs, from completing prompts to translating between languages. GPT-3 has drawn significant attention for tasks such as language translation, chatbot conversation, and writing coherent articles, and it has applications across many industries.

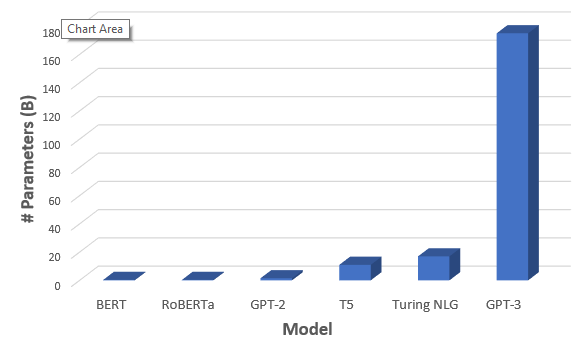

GPT-3 (Generative Pre-trained Transformer 3) is often considered a step beyond earlier language models because of its size, architecture, and training data.

- Firstly, GPT-3 is much larger than its predecessors. With 175 billion parameters (GPT-2 had 1.5 billion), it was one of the largest language models in existence when it was released in 2020. This large number of parameters lets GPT-3 capture more complex language patterns and generate more human-like responses.

- Secondly, GPT-3 uses a decoder-only transformer architecture. Its self-attention mechanism lets each token weigh every earlier token in the input, so the model can focus on the most relevant parts of the context and generate more coherent responses.

- Lastly, GPT-3 was trained on roughly 300 billion tokens of text from diverse sources, including filtered web pages (Common Crawl), books, and Wikipedia. This broad training corpus gives GPT-3 a wide command of language, making it capable of generating high-quality text across many tasks and domains.
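To make the self-attention idea concrete, here is a minimal single-head sketch of causal (masked) scaled dot-product attention in plain Python. It is a simplification, not GPT-3's implementation: real models first multiply each token vector by learned query, key, and value matrices, run many heads in parallel, and stack dozens of layers with feed-forward blocks. Here the same vectors are passed in as queries, keys, and values purely for illustration.

```python
import math

def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    queries, keys, values: lists of equal-length vectors, one per token.
    Token i may only attend to tokens 0..i, as in a decoder-only model.
    """
    d = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        # Score token i against itself and every earlier token only.
        scores = [
            sum(qj * kj for qj, kj in zip(q, keys[t])) / math.sqrt(d)
            for t in range(i + 1)
        ]
        weights = softmax(scores)
        # The output is the attention-weighted average of the visible value vectors.
        out = [
            sum(w * values[t][c] for t, w in enumerate(weights))
            for c in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(causal_self_attention(tokens, tokens, tokens)[0])  # [1.0, 0.0]
```

Because of the causal mask, the first token can only attend to itself, so its output equals its own value vector, and changing a later token never changes the outputs for earlier ones. This is what allows the model to generate text one token at a time.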

Overall, its large size, optimized architecture, and extensive training data make GPT-3 one of the most impressive AI models for natural language processing.
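To see where the 175 billion figure comes from, a common rule of thumb estimates a transformer's parameter count as about 12 × layers × d_model², which covers the attention projections (about 4·d²) and the feed-forward block (about 8·d²) in each layer, plus the token-embedding matrix. The sketch below plugs in GPT-3's published configuration (96 layers, a model width of 12,288, and a 50,257-token vocabulary). It is an approximation that ignores biases, layer norms, and position embeddings.

```python
def approx_transformer_params(n_layers, d_model, vocab_size):
    """Rough parameter count for a decoder-only transformer.

    Per layer: ~4*d^2 for the Q, K, V and output projections, plus ~8*d^2
    for the feed-forward block (d -> 4d -> d), i.e. ~12*d^2 in total.
    Biases, layer norms and position embeddings are ignored.
    """
    per_layer = 12 * d_model ** 2
    embeddings = vocab_size * d_model  # token embedding matrix
    return n_layers * per_layer + embeddings

total = approx_transformer_params(n_layers=96, d_model=12_288, vocab_size=50_257)
print(f"~{total / 1e9:.1f} billion parameters")  # ~174.6 billion parameters
```

Almost all of the parameters sit in the repeated layers, so doubling the model width roughly quadruples the parameter count.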

Building a GPT-3-powered application typically involves the following steps:

- Define the problem and requirements: Determine your application’s specific use case and requirements. Decide what kind of input and output your application needs and what tasks you want it to perform using GPT-3.

- Gather and prepare data: Collect the text you will need for prompts, for evaluation, and, if you plan to fine-tune, for training examples. This may include text from websites, social media, and internal documents.

- Choose a GPT-3 API provider: Choose a provider that offers access to GPT-3 models, such as OpenAI or Microsoft's Azure OpenAI Service, and set up an account.

- Integrate the API: Connect your application to the GPT-3 API by following your provider's documentation, either by calling its REST endpoints directly or through an official SDK.

- Fine-tune the GPT-3 model: If prompting alone does not give good enough results, fine-tune a GPT-3 model on the data you gathered earlier so it performs the specific tasks your application requires.

- Test and evaluate: Test your GPT-3-powered application to ensure it performs correctly, and evaluate its performance against your defined requirements.

- Deploy and monitor: Deploy your application to production, and monitor its performance and usage over time. Continuously improve and update the application to ensure it remains effective and efficient.
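The data-preparation, integration, and fine-tuning steps above can be sketched in a few lines of standard-library Python. This is a sketch under assumptions, not a provider's official client. It assumes OpenAI's Completions endpoint (`https://api.openai.com/v1/completions`) and the prompt/completion JSON Lines format OpenAI has documented for fine-tuning its completion-style models. The function names are hypothetical, and the model name `davinci-002` is only an example, because available GPT-3-family models change over time. Check your provider's current documentation before relying on any of these details.

```python
import json
import urllib.request

# Assumption: OpenAI's Completions endpoint; other providers use different URLs.
API_URL = "https://api.openai.com/v1/completions"

def to_finetune_jsonl(examples):
    """Serialize (prompt, completion) pairs as JSON Lines, one object per line.

    Hypothetical helper: the field names follow OpenAI's documented format for
    fine-tuning completion-style models; verify them for your provider.
    """
    return "".join(
        json.dumps({"prompt": p, "completion": c}) + "\n" for p, c in examples
    )

def build_completion_request(api_key, prompt, model, max_tokens=100, temperature=0.2):
    """Build (but do not send) an HTTP POST request for a text completion."""
    body = json.dumps({
        "model": model,
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": temperature,
    }).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

def complete(api_key, prompt, model):
    """Send the request and return the text of the first generated choice."""
    req = build_completion_request(api_key, prompt, model)
    with urllib.request.urlopen(req, timeout=30) as resp:
        data = json.load(resp)
    return data["choices"][0]["text"]
```

A call such as `complete(api_key, "Summarize: ...", model="davinci-002")` would return the generated text. Separating request construction from sending makes the integration easy to unit-test without network access. It also gives the "test and evaluate" step a single place to log prompts and responses.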

Building a GPT-3-powered application can require skills in machine learning, natural language processing, and software development, so working with experienced professionals or consulting experts in these fields may help.

Some of the applications of the GPT-3 model include:

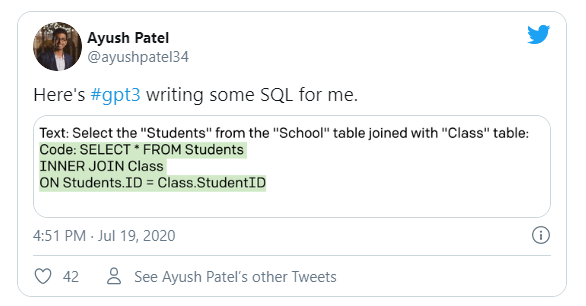

- Chatbots: GPT-3 can power chatbots that converse with users in natural, fluent language.

- Content creation: GPT-3 can generate high-quality content such as articles, essays, and product descriptions.

- Language translation: GPT-3 can be used to build tools that translate text from one language to another.

- Personal assistants: GPT-3 can power virtual personal assistants that, when connected to calendar and reminder services, schedule appointments, set reminders, and answer queries.

- Sentiment analysis: GPT-3 can analyze text sentiment, helping businesses and organizations understand customers’ feelings about their products and services.

- Text completion: GPT-3 can be used to complete text in a way that matches the tone, style, and content of the original text, making it useful for tasks such as writing emails and reports.

- Question answering: GPT-3 can be used to answer user questions, making it useful for educational and informational purposes.

- Game development: GPT-3 can be used to develop games that require natural language processing, such as text-based adventure games or puzzle games.
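Many of these applications, such as sentiment analysis, can be built with few-shot prompting rather than fine-tuning. In few-shot prompting, a handful of labelled examples is placed in the prompt and the model continues the pattern. This is the in-context learning approach used to evaluate GPT-3 in its original paper. The sketch below only builds the prompt text and parses the model's reply. The example reviews, the label set, and the function names are hypothetical, and the prompt would be sent to whichever completion endpoint your provider offers.

```python
# Hypothetical labelled examples used as in-context demonstrations.
FEW_SHOT_EXAMPLES = [
    ("The checkout process was fast and painless.", "positive"),
    ("My order arrived broken and support never replied.", "negative"),
    ("The package arrived on Tuesday.", "neutral"),
]

LABELS = ("positive", "negative", "neutral")

def build_sentiment_prompt(text, examples=FEW_SHOT_EXAMPLES):
    """Build a few-shot prompt: an instruction, demonstrations, then the new input."""
    lines = ["Classify the sentiment of each review as positive, negative, or neutral.", ""]
    for review, label in examples:
        lines.append(f"Review: {review}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # End with an open "Sentiment:" slot for the model to complete.
    lines.append(f"Review: {text}")
    lines.append("Sentiment:")
    return "\n".join(lines)

def parse_sentiment(completion):
    """Map the model's raw completion to one of LABELS, or None if unrecognized."""
    words = completion.strip().lower().split()
    if not words:
        return None
    first = words[0].strip(".,!")
    return first if first in LABELS else None

print(parse_sentiment(" Positive.\n"))  # positive
```

Validating the reply against a fixed label set matters because the model generates free text. A reply outside the expected labels should be treated as a failure rather than passed on to downstream analytics.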

Developing an application that uses GPT-3's advanced capabilities can be expensive, depending on the app's scope, complexity, required integrations, platform support, and the development team's expertise. In addition, using the GPT-3 API incurs ongoing usage costs, typically billed per token processed (both the prompt and the generated text), at rates that depend on the model and the API provider.
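Because API usage is billed by tokens rather than by request, a rough monthly budget is simple arithmetic: requests × tokens per request × price per token, computed separately for input (prompt) and output (completion) tokens, since providers often price them differently. The sketch below assumes prices quoted per 1,000 tokens. The rates in the example are placeholders, not real prices, so substitute the figures from your provider's current price list.

```python
def estimate_monthly_cost(requests_per_day, prompt_tokens, completion_tokens,
                          input_price_per_1k, output_price_per_1k, days=30):
    """Estimate monthly API spend under per-token pricing.

    Prices are per 1,000 tokens and must come from your provider's price list.
    Token counts are averages per request.
    """
    requests = requests_per_day * days
    input_cost = requests * prompt_tokens / 1000 * input_price_per_1k
    output_cost = requests * completion_tokens / 1000 * output_price_per_1k
    return input_cost + output_cost

# Placeholder rates for illustration only, not real prices.
cost = estimate_monthly_cost(requests_per_day=1000, prompt_tokens=500,
                             completion_tokens=200, input_price_per_1k=0.002,
                             output_price_per_1k=0.002)
print(f"${cost:.2f} per month")
```

With these placeholder rates, 1,000 requests a day averaging 500 prompt tokens and 200 completion tokens come to about $42 a month. Trimming long prompts is often the easiest way to cut this figure.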

If you need a precise estimate for developing an application that includes GPT-3, please do not hesitate to contact us. Our team of experts will be happy to provide you with a thorough evaluation of the project’s requirements and expenses.

The cost of developing a GPT-3 application depends on several factors, such as which model you use, how much data storage and hosting you need, and how many advanced features the app requires. Projects with more complex features usually demand more time and resources from a larger development team, which raises overall development costs.
