Since you are here, I assume you have already realized the power of Large Language Models (LLMs) like GPT-3. In this article, I explain how you can harness that power to put your business on steroids. I’ll explain prompt engineering and how you can fine-tune LLMs like GPT-3 so that they can automate many of your business tasks. I’m not talking about generic tasks like asking ChatGPT to write a recommendation letter, but about getting LLMs to automate tasks like creating a 2-year sales forecast for your business or writing technical documentation for your products. And, of course, completing these tasks in a matter of seconds.

If you want a quick brush-up on the basics, my previous article on getting started with GPT-3 can be a good guide. Also, if you have unanswered questions after reading this article, you can message me through the ‘Ask Author’ feature on the right side of this blog. Alright, so let’s jump into the topic.

Pre-trained machine learning models have already been trained on enormous amounts of data. In the case of GPT-3, it is estimated that the training corpus started from over 45 TB of raw text before filtering. However, GPT-3 and other LLMs will not produce quality results out of the box for a domain-specific business use case, because these models were not trained on data specific to your business.

Fine-tuning is the process of further training a pre-trained model on a specific task so that it produces the desired results. It involves tuning the pre-trained model on a new dataset relevant to the task. In this article, we’ll learn how to fine-tune a GPT-3 model using a process that involves prompt engineering.

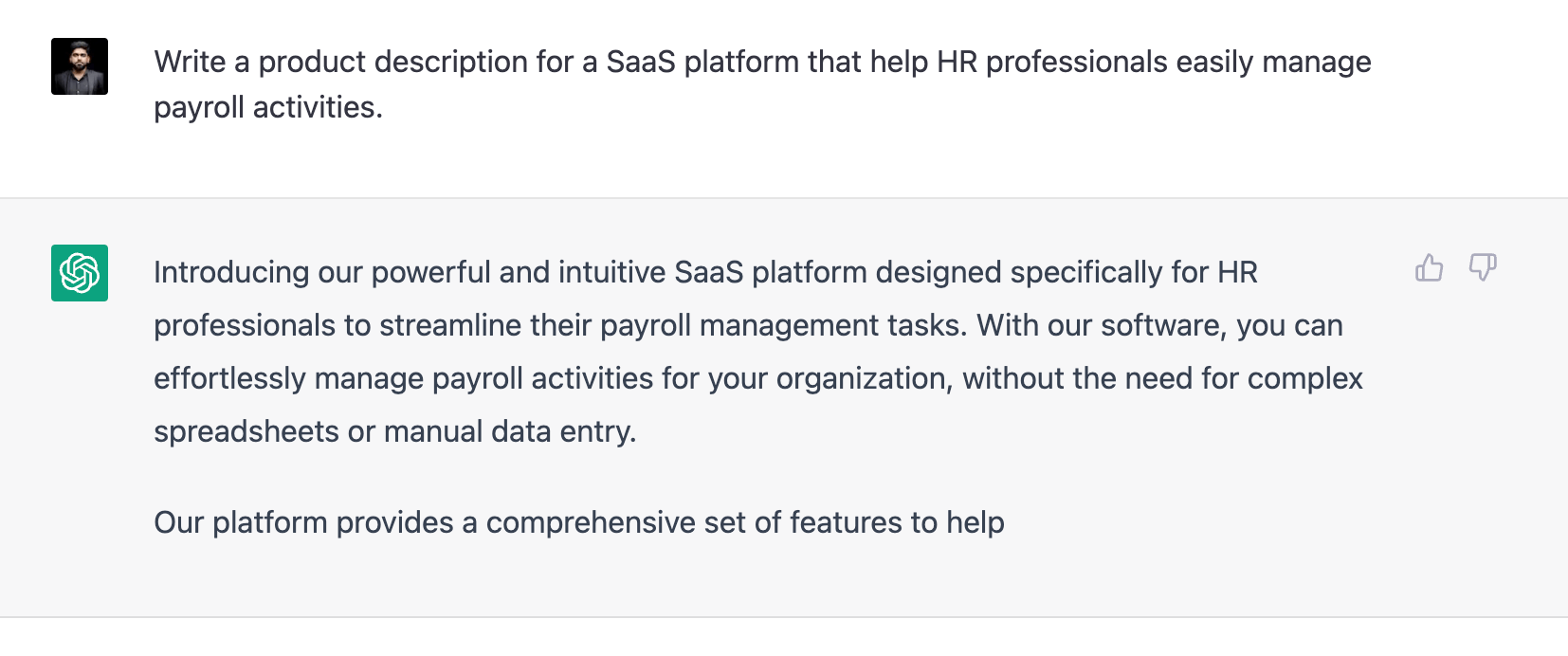

A prompt is the input you give to the model. It is a phrase that guides the model to generate the desired output. An example of a prompt and the response generated by ChatGPT is shown below.

Prompt engineering aims to design and optimize text-based instructions for language models to generate accurate and relevant outputs. Prompt engineering improves language models’ performance on specific tasks.

To fine-tune GPT-3 for a domain-specific task, you must give the model additional, domain-specific data and design prompts that generate the desired results. The steps are as follows:

Prepare training data: First, create a text dataset relevant to the task you want to fine-tune for. The more diverse the data, the better the fine-tuning. After creating the dataset, you need to preprocess it, that is, clean and transform the raw data into a format the model can be trained on. The exact preprocessing steps depend on your dataset’s format and content and on the task; they typically involve data normalization and feature extraction. Next, through careful prompt design, create a set of prompts for the task you need GPT-3 to execute. The prompts should be relevant to the dataset you prepared. After this step, you have enough data to create the training dataset. The format for the training dataset is shown below.

    {"prompt": "<prompt text>", "completion": "<desired generated text>"}
    {"prompt": "<prompt text>", "completion": "<desired generated text>"}
    {"prompt": "<prompt text>", "completion": "<desired generated text>"}
    ...
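As a minimal sketch of how such a file could be produced, assuming the prompt and completion pairs are already available as Python tuples: the helper name `to_jsonl` and the sample pair are hypothetical, made up for illustration. Using `json.dumps` means quotes and newlines inside the text are escaped, so each record stays on a single line.

```python
import json

def to_jsonl(pairs):
    """Serialize (prompt, completion) pairs, one JSON object per line."""
    lines = []
    for prompt, completion in pairs:
        record = {"prompt": prompt, "completion": completion}
        # ensure_ascii=False keeps non-ASCII characters readable in the file
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"

# Hypothetical example pair, for illustration only
sample_pairs = [
    ("Write a description of Paracetamol, mention Generic Drug and tablets",
     "Paracetamol is a generic drug available as tablets."),
]
jsonl_text = to_jsonl(sample_pairs)
print(jsonl_text, end="")
```

The returned string can then be written to a `.jsonl` file with an ordinary `open(..., "w", encoding="utf-8")` call.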

Fine-tuning: Call the fine-tune API with the training data you created in the previous step to start fine-tuning the model. Once fine-tuning is complete, you will receive the details of the fine-tuned model as the output. Then evaluate the performance of the fine-tuned model. If the results are unsatisfactory, repeat both steps, adding more relevant prompts, cleaning the training data, and adjusting API parameters until the results are satisfactory.
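One rough way to make the evaluation step repeatable is a keyword check: does each generated description mention the fields its prompt asked for? The sketch below assumes that criterion only. The function names and sample outputs are hypothetical, and a check like this would complement human review rather than replace it.

```python
def missing_terms(description, required_terms):
    """Return the required terms that do not appear in the description."""
    text = description.lower()
    return [term for term in required_terms if term.lower() not in text]

def coverage(results):
    """Fraction of (description, required_terms) pairs with no missing terms."""
    if not results:
        return 0.0
    passed = sum(1 for desc, terms in results if not missing_terms(desc, terms))
    return passed / len(results)

# Hypothetical model outputs, for illustration only
results = [
    ("Paracetamol is a generic drug sold as tablets.",
     ["Paracetamol", "Generic Drug", "tablets"]),
    ("Doxycycline is widely used.",
     ["Doxycycline", "Antibiotic", "injection"]),
]
print(coverage(results))
```

A low score here would be one signal to go back and add prompts or clean the training data.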

Suppose you’re running a pharmaceutical company and want to fine-tune GPT3 to generate descriptions for your products. The pre-trained model will fail to create the necessary descriptions for two reasons.

So, to meet your business requirement, you need to train the model further. Simply put, you must fine-tune the model with your own data and prompts. To do this, you must create a training dataset in the format shown above, which requires a list of prompts and their corresponding completions (the desired result for each prompt).

Now, let’s see how to create the list of prompts and completions. The first step is to prepare a dataset that covers the required details about your products. Each product’s details should be a separate record in the dataset. A sample format is given below.

| Product Name | Type | Details |
| --- | --- | --- |
| Paracetamol | Generic Drug | tablets, syrup |
| Doxycycline | Antibiotic | injection, tablets |
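If the product data lives in a spreadsheet export, it could be loaded with Python’s `csv` module. This is a sketch under assumptions: the column names follow the sample table, the comma-separated Details cell is quoted in the CSV, and `load_products` is a hypothetical helper name.

```python
import csv
import io

# Stand-in for a CSV export of the sample table
CSV_TEXT = '''Product Name,Type,Details
Paracetamol,Generic Drug,"tablets,syrup"
Doxycycline,Antibiotic,"injection,tablets"
'''

def load_products(csv_file):
    """Read rows into (name, type, [details]) tuples."""
    products = []
    for row in csv.DictReader(csv_file):
        # Split the Details cell into a clean list of individual values
        details = [d.strip() for d in row["Details"].split(",") if d.strip()]
        products.append((row["Product Name"].strip(), row["Type"].strip(), details))
    return products

product_data = load_products(io.StringIO(CSV_TEXT))
print(product_data)
```

For a real file, `io.StringIO(CSV_TEXT)` would be replaced by an opened file object.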

The next step, designing prompts, is the tricky part. How you design the prompts determines how well the model performs and generalizes on your task. First, you need to define a specific format for the prompts. The format determines what information should be included in the generated description and how it should be structured.

Example of a prompt format: Write a description of [product name], mention [type] and [details]

After defining the prompt format, you can generate a list of prompts using a simple program. The logic is that the variables in the prompt format are replaced by the data available in the dataset. OpenAI’s guidance for this workflow recommends at least a couple hundred examples, so plan for 200 or more prompts. Depending on the complexity of the task, you may need more. A simple Python script to generate prompts for the above dataset is given below.

    # Each record: (product name, type, list of details)
    product_data = [
        ('Paracetamol', 'Generic Drug', ['tablets', 'syrup']),
        ('Doxycycline', 'Antibiotic', ['injection', 'tablets']),
    ]

    # Fill in the prompt format once per (product, detail) combination
    for name, product_type, details in product_data:
        for detail in details:
            prompt = f"Write a description of {name}, mention {product_type} and {detail}"
            print(prompt)

Example of generated prompts:

    Write a description of Paracetamol, mention Generic Drug and tablets
    Write a description of Paracetamol, mention Generic Drug and syrup
    Write a description of Doxycycline, mention Antibiotic and injection
    Write a description of Doxycycline, mention Antibiotic and tablets
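With a few hundred generated prompts, reading every one is impractical, so a random spot-check is one option. The sketch below removes exact duplicates and draws a fixed-size sample. The helper name `sample_for_review`, the sample size, and the seed are all arbitrary choices for illustration.

```python
import random

def sample_for_review(prompts, k=20, seed=0):
    """Drop exact duplicates (keeping order), then pick up to k prompts at random."""
    unique = list(dict.fromkeys(prompts))
    rng = random.Random(seed)  # fixed seed so the same sample can be re-checked
    return rng.sample(unique, min(k, len(unique)))

prompts = [
    "Write a description of Paracetamol, mention Generic Drug and tablets",
    "Write a description of Paracetamol, mention Generic Drug and syrup",
    "Write a description of Doxycycline, mention Antibiotic and injection",
    "Write a description of Doxycycline, mention Antibiotic and tablets",
]
for p in sample_for_review(prompts, k=2):
    print(p)
```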

After generating the prompts, manually review a sample of them to make sure they are sensible. You now have the list of prompts required to fine-tune GPT-3, so the first part of the training dataset is complete. The second part is the list of desired completions: you need a description for each prompt. If your product information or the descriptions you want GPT-3 to generate are generic, you can call the GPT-3 API to draft a completion for each prompt you created in the previous step. Make sure that each generated completion is satisfactory; if not, modify the prompt until it is. A sample consolidated code is given below.

    # Note: this uses the legacy openai Python SDK interface (versions before 1.0)
    import time

    import openai

    openai.api_key = "YOUR_API_KEY"

    product_data = [
        ('Paracetamol', 'Generic Drug', ['tablets', 'syrup']),
        ('Doxycycline', 'Antibiotic', ['injection', 'tablets']),
    ]

    for name, product_type, details in product_data:
        for detail in details:
            prompt = f"Write a description of {name}, mention {product_type} and {detail}"
            response = openai.Completion.create(
                engine="davinci",
                prompt=prompt,
                max_tokens=1024,
                n=1,
                stop=None,
                temperature=0.7,
            )
            # Review each draft before using it as a training completion
            print(response.choices[0].text)
            time.sleep(1)  # pause between calls to stay under the rate limit
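A fixed one-second pause is the simplest way to respect rate limits. A common alternative is to retry a failed call with exponentially growing delays. The sketch below shows that pattern in isolation, using a stand-in function instead of a real API call. `call_with_backoff` is a hypothetical helper, and catching every `Exception` is a simplification: real code would catch only the client’s rate-limit error.

```python
import time

def call_with_backoff(func, max_retries=5, base_delay=1.0, sleep=time.sleep):
    """Call func(); on an exception, wait base_delay * 2**attempt and retry."""
    for attempt in range(max_retries):
        try:
            return func()
        except Exception:
            if attempt == max_retries - 1:
                raise  # out of retries: surface the last error
            sleep(base_delay * 2 ** attempt)

# Stand-in for an API call that fails twice, then succeeds
attempts = {"count": 0}

def flaky():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RuntimeError("simulated rate limit")
    return "ok"

delays = []  # record the requested delays instead of actually sleeping
print(call_with_backoff(flaky, sleep=delays.append))
```

Passing `sleep` as a parameter keeps the helper easy to test, since the delays can be recorded rather than waited out.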

Now you have the required data to create the training dataset, as shown in the format below.

    {"prompt": "<prompt text>", "completion": "<desired generated text>"}
    {"prompt": "<prompt text>", "completion": "<desired generated text>"}
    {"prompt": "<prompt text>", "completion": "<desired generated text>"}
    ...
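Before uploading the file, it may be worth checking that every line parses and carries both fields. This is a minimal sketch of such a check. The function name `validate_jsonl` and the sample records are hypothetical, and the rules (non-empty string values for `prompt` and `completion`) are assumptions based on the format shown here.

```python
import json

def validate_jsonl(text):
    """Return a list of (line_number, problem) tuples; empty means no problems found."""
    problems = []
    for number, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            problems.append((number, "not valid JSON"))
            continue
        if not isinstance(record, dict):
            problems.append((number, "not a JSON object"))
            continue
        for key in ("prompt", "completion"):
            value = record.get(key)
            if not isinstance(value, str) or not value.strip():
                problems.append((number, f"missing or empty '{key}'"))
    return problems

# Hypothetical file contents: one good record, one incomplete, one malformed
sample = (
    '{"prompt": "Write a description of Paracetamol", "completion": " A sample description."}\n'
    '{"prompt": "Write a description of Doxycycline"}\n'
    'not json\n'
)
print(validate_jsonl(sample))
```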

The next step is to initiate the fine-tuning job using the OpenAI CLI:

    openai api fine_tunes.create -t <TRAIN_FILE_ID_OR_PATH> -m <BASE_MODEL>

After you initiate a fine-tuning job, it may take a significant amount of time to complete. When the job is done, you’ll receive the name of the fine-tuned model. There you go: you have a fine-tuned GPT-3 model capable of doing custom tasks.

Fine-tuning a GPT-3 model involves training it on a specific task or domain by providing it with task-specific data. This allows the model to adapt and generate more accurate responses. There are several ways to adapt a GPT-3 model, depending on the task and the available data.

One approach is prompt engineering, which involves providing the model with specific prompts that guide its generation. This can be useful for tasks such as question-answering, where the prompt is a question and the model generates the answer. In this case, the prompt is designed to elicit the desired response from the model, and the model is fine-tuned on a dataset of similar prompts paired with the desired completions.

Additionally, few-shot learning can be used to adapt GPT-3 by providing just a few examples of the target task. With GPT-3, this usually means selecting a small number of representative examples and placing them directly in the prompt, without updating the model’s weights. This approach is particularly useful when limited data is available for the target task. Overall, fine-tuning a GPT-3 model involves providing it with task-specific prompts and completions and using supervised training to optimize the model’s performance on the target task.
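Few-shot examples are commonly supplied inside the prompt itself. As a minimal sketch of that idea, the helper below joins a few worked examples and a new request into one prompt string. The `Request:` and `Description:` labels, the function name, and the example text are all hypothetical choices for illustration.

```python
def build_few_shot_prompt(examples, query, separator="\n\n"):
    """Place worked examples before the new request in a single prompt string."""
    parts = [f"Request: {req}\nDescription: {desc}" for req, desc in examples]
    # Leave the final description empty so the model completes it
    parts.append(f"Request: {query}\nDescription:")
    return separator.join(parts)

# Hypothetical worked example, for illustration only
examples = [
    ("Paracetamol, Generic Drug, tablets",
     "Paracetamol is a generic drug supplied as tablets."),
]
prompt = build_few_shot_prompt(examples, "Doxycycline, Antibiotic, injection")
print(prompt)
```

The resulting string would be sent as the prompt of an ordinary completion request, with no training job involved.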